What is Hadoop Pig?

Apache Pig is a high-level data processing platform that runs on the Hadoop framework and is used to process large data sets stored in HDFS. Pig programs are written in a language called Pig Latin.

Why is there a need for the Pig language?

Big data processing has advanced considerably since it first emerged. The MapReduce programming model has a simple design that breaks work down and recombines it in a series of parallelizable operations, which makes it highly scalable. Because MapReduce expects hardware failures, it can run on inexpensive commodity hardware, sharply lowering the cost of a computing cluster.

However, although MapReduce puts parallel programming within reach of most professional software engineers, developing MapReduce jobs isn’t easy:

- They require the programmer to think in terms of “map” and “reduce”.

- N-stage jobs can be difficult to manage.

- Common operations (such as filters, projections, and joins) and rich data types require custom code.

Apache Pig was developed to address these problems. It automates the low-level details of MapReduce and gives users a high-level abstraction over it.

How does Pig work?

Whenever a Pig Latin statement or a Pig script is executed, Pig internally translates it into one or more MapReduce jobs, which then run on the Hadoop cluster against data in HDFS.

What is the difference between Pig and SQL?

Pig Latin is procedural, whereas SQL is declarative. The two have some similarities but more differences. SQL is a query language in which the user asks a question in the form of a query; it states what answer is wanted but not how to compute it. If a user wants to perform multiple operations on tables, they must either write multiple queries and store intermediate results in temporary tables, or use subqueries. Many SQL users find subqueries confusing and difficult to form properly.

Using subqueries creates an inside-out design where the first step in the data pipeline is the innermost query. Pig is designed with a long series of data operations in mind, so there is no need to write the data pipeline as an inverted set of subqueries or to worry about storing data in temporary tables.
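The contrast can be sketched in plain Python rather than in SQL or Pig Latin. The records, field names, and threshold below are invented for illustration. The first function nests each step inside the next, the way subqueries do; the second names every intermediate result and reads top to bottom, the way a Pig Latin script does.

```python
from collections import defaultdict

# Hypothetical input: (user, country, amount) records.
ORDERS = [
    ("ann", "US", 30.0),
    ("bob", "US", 5.0),
    ("cid", "DE", 12.5),
    ("dee", "DE", 40.0),
    ("eve", "FR", 2.0),
]


def total_by_key(pairs):
    """Sum the values of (key, value) pairs per key."""
    totals = defaultdict(float)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


def inside_out(orders, min_amount):
    # Subquery style: the first step (the filter) is the innermost expression.
    return sorted(
        total_by_key(
            (country, amount)
            for _, country, amount in (o for o in orders if o[2] >= min_amount)
        ).items(),
        key=lambda kv: kv[1],
        reverse=True,
    )


def pipeline(orders, min_amount):
    # Data-flow style: each step has a name and appears in the order it runs.
    large = [o for o in orders if o[2] >= min_amount]                 # filter
    projected = [(country, amount) for _, country, amount in large]   # project
    totals = total_by_key(projected)                                  # group and sum
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)  # order
    return ranked


print(pipeline(ORDERS, 10.0))
```

Both functions compute the same result; the difference is only in how easy the order of operations is to follow.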

How does Pig differ from MapReduce?

In MapReduce, a group-by operation is performed on the reducer side, while filters and projections can be implemented in the map phase. Pig Latin provides these standard operations, such as ORDER BY, FILTER, and GROUP BY, as built-in operators. Because a Pig script describes the data flow explicitly, Pig can analyze the script and catch errors early. Pig Latin code is also much cheaper to write and maintain than Java code for MapReduce.

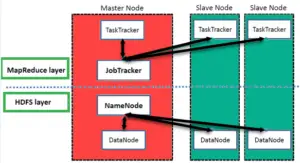
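To make the map-side and reduce-side split concrete, here is a single-process Python simulation of one MapReduce job. It is only a sketch: it does not use the Hadoop API, and the record layout and function names are assumptions made for the example. In Pig Latin the same work would be expressed with FILTER, FOREACH ... GENERATE, and GROUP statements instead of hand-written phases.

```python
from collections import defaultdict

# Hypothetical log records: (page, status, bytes_sent).
RECORDS = [
    ("/home", 200, 100),
    ("/home", 404, 10),
    ("/about", 200, 50),
    ("/home", 200, 25),
]


def map_phase(record):
    """Filter and project in the mapper: keep successful requests only
    and emit (page, bytes_sent) pairs."""
    page, status, bytes_sent = record
    if status == 200:
        yield page, bytes_sent


def shuffle(pairs):
    """Group mapper output by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Aggregate on the reducer side: total bytes per page."""
    return key, sum(values)


def run_job(records):
    mapped = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


print(run_job(RECORDS))
```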

Explain the Pig architecture.

The Pig architecture is responsible for converting Pig Latin statements into MapReduce code. It compiles and executes a Pig script by passing it through the following plans:

- Logical Plan

- Physical Plan

- MapReduce Plan

What are the different modes available in Pig?

Pig has two modes:

- Local Mode (runs on a single machine using the local file system)

- MapReduce Mode (runs on a Hadoop cluster)

What are the different execution modes available in Pig?

There are three modes of execution available in Pig:

- Interactive Mode (Also known as Grunt Mode)

- Batch Mode

- Embedded Mode

What are the advantages of the Pig language?

Pig is easy to learn: It reduces the need to write complex MapReduce programs. Pig works in a step-by-step manner, so it is easy to write and, even better, easy to read.

It can handle heterogeneous data: Pig can handle all types of data – structured, semi-structured, or unstructured.

Pig is faster: Pig’s multi-query approach combines certain types of operations in a single pipeline, reducing the number of times the data is scanned.

Pig does more with less: Pig provides the common data operations (filters, joins, ordering, etc.) and nested data types (e.g., tuples, bags, and maps) that can be used in processing data.

Pig is extensible: Pig is easily extended with UDFs written in languages including Python, Java, JavaScript, and Ruby, which you can use to load, aggregate, and analyze data. Pig also insulates your code from changes to the Hadoop Java API.

What are the basic steps to write a UDF in Pig?

- Define a class that extends EvalFunc<T>, where T is the return data type (for example, EvalFunc<String>)

- Override the exec(Tuple input) method, whose return type matches T (for example, public String exec(Tuple input)), and add the required business logic

- Create a JAR file for that class

- Register the JAR file in the Pig script: REGISTER JarFilename;

- Write the Pig script that uses the UDF (the UDF should be referred to by its fully qualified name, i.e. PackageName.ClassName)

- Execute the Pig script
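The steps above describe a Java UDF. Since Pig also accepts UDFs written in Python, the following is a minimal sketch of that variant, not of the Java path. It assumes the file is registered through Pig's Jython support, where the outputSchema decorator is supplied by Pig; a stand-in decorator is defined so the file also runs as ordinary Python. The file name my_udfs.py, the function to_upper, and the relation and field names in the comments are all made up for illustration.

```python
# Hypothetical file: my_udfs.py
# Assumed usage inside a Pig script (Jython engine):
#   REGISTER 'my_udfs.py' USING jython AS my_udfs;
#   B = FOREACH A GENERATE my_udfs.to_upper(name);

try:
    outputSchema  # assumed to be provided by Pig when loaded through Jython
except NameError:
    def outputSchema(schema):
        """Stand-in decorator so the file can run outside Pig."""
        def wrap(func):
            func.output_schema = schema
            return func
        return wrap


@outputSchema("upper_name:chararray")
def to_upper(value):
    """Return the upper-cased string, or None for a null input."""
    if value is None:
        return None
    return value.upper()


print(to_upper("pig"))
```

With a script UDF there is no class to compile or JAR to build; the register step points at the script file instead.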

What are the primitive data types in Pig?

- int

- long

- float

- double

- chararray

- bytearray

What are the different functions available in the Pig Latin language?

Pig Latin has many built-in data analysis functions. Some of the categories are:

- Math Functions

- Eval Functions

- String Functions

What are the different math functions available in Pig?

- ABS

- ACOS

- EXP

- LOG

- ROUND

- CBRT

- RANDOM

- SQRT

What are the different Eval functions available in Pig?

- AVG

- CONCAT

- MAX

- MIN

- SUM

- SIZE

- COUNT

- COUNT_STAR

- DIFF

- TOKENIZE

- IsEmpty
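A point that often comes up with these aggregates is null handling: COUNT skips tuples whose first field is null, COUNT_STAR counts every tuple, and SUM and AVG ignore null values. The sketch below mimics that behavior in plain Python, with None standing in for null. The helper names and the sample bag are invented for illustration and are not Pig's API.

```python
# A "bag" is modelled as a list of tuples; None plays the role of null.
BAG = [(3,), (None,), (5,), (4,)]


def non_null_first_fields(bag):
    """Collect the first field of each tuple, skipping nulls."""
    return [t[0] for t in bag if t[0] is not None]


def pig_count(bag):
    """Like COUNT: tuples with a null first field are not counted."""
    return len(non_null_first_fields(bag))


def pig_count_star(bag):
    """Like COUNT_STAR: every tuple is counted, nulls included."""
    return len(bag)


def pig_sum(bag):
    """Like SUM: nulls are ignored. Returning None when no values remain
    is an assumption meant to mirror a null result."""
    values = non_null_first_fields(bag)
    return sum(values) if values else None


def pig_avg(bag):
    """Like AVG: the mean of the non-null values only."""
    values = non_null_first_fields(bag)
    return sum(values) / len(values) if values else None


print(pig_count(BAG), pig_count_star(BAG), pig_sum(BAG), pig_avg(BAG))
```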

What are the different String functions available in Pig?

- UPPER

- LOWER

- TRIM

- SUBSTRING

- INDEXOF

- STRSPLIT

- LAST_INDEX_OF

What are the different Relational Operators available in the Pig language?

- Loading and Storing

- Filtering

- Grouping and joining

- Sorting

- Combining and Splitting

- Diagnostic